Based on our recent discussions, 80% of the problems that cause War Room scenarios come from only about 20% of problem patterns. Most of them are related to performance or architectural issues in the application or in the infrastructure that supports it. A recent study concluded that 80% of development time is wasted on triaging and fixing problems, which adds up to an estimated $60B in annual costs in the US alone. Whether or not you believe these numbers, if you have ever spent your evenings or weekends in a war room trying to fix yet another production problem or trying to get the final tasks for a release out the door, you need to change the way you develop and deploy software.

Over the last few weeks we had many great conversations with the DevOps community at events such as JavaOne, Star West, Web & PHP, and our Dynatrace PERFORM User Conference. We even spoke with people like Kevin Behr, one of the authors of The Phoenix Project. They all confirmed that these war rooms consume most of their engineering time despite their efforts to move to a leaner, continuous delivery model. The consensus was that DevOps needs to include a focus on performance and user experience to keep the end users of the delivered software happy and productive. But what does this mean exactly, and what needs to change?

Cultural Shift: “Connect” your Teams

Looking at the top reasons why applications are slow or crash, and why development teams spend so much time fixing problems rather than pushing out more changes more frequently, it is obvious that there is a disconnect between teams. Performance requirements are not well understood by all teams involved, and there is no automated way to measure the success or failure of the work that has been done. To fix this, we have a couple of ideas that already work well for our customers. They will help you align the goals of all teams involved so that you do not end up with epic failures like the ones in the following pictures:

Extend your Requirements

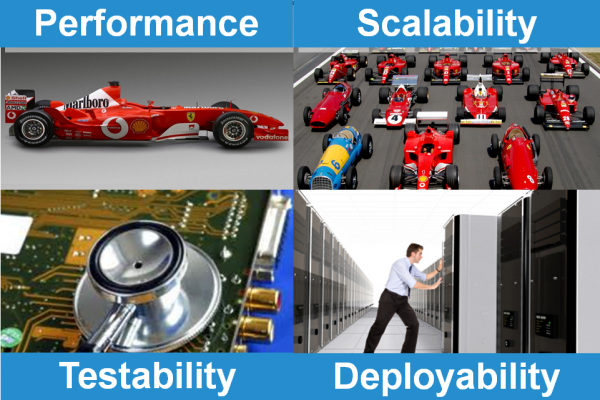

The top problem patterns show that most of these issues are related to application performance and scalability. A best practice, therefore, is to add performance and architectural requirements to the functional requirements of your agile development tasks or stories. A performance requirement could be: 100 users must be able to execute the new search feature and get a response within 1 second. An architectural or scalability requirement would be that the application can scale horizontally, handling additional load by adding servers without impacting response time.
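
One way to make such a requirement checkable is to encode it as an automated test. The sketch below is a minimal illustration under stated assumptions: `search` is a hypothetical stand-in for the new feature, the 100 simulated users run concurrently in a thread pool, and the requirement is interpreted as "the 95th percentile response time is at most 1 second". The percentile is an assumption; your team may decide the limit applies to every single request.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def measure_latencies(action: Callable[[], None], users: int) -> List[float]:
    """Run `action` once per simulated user, all concurrently, timing each call."""
    def timed_call(_: int) -> float:
        start = time.perf_counter()
        action()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=users) as pool:
        return list(pool.map(timed_call, range(users)))


def meets_requirement(latencies: List[float], max_seconds: float = 1.0, pct: float = 95.0) -> bool:
    """True if the chosen percentile of the measured latencies is within the limit."""
    return percentile(latencies, pct) <= max_seconds


def search() -> None:
    # Hypothetical stand-in for the new search feature; replace with a real request.
    time.sleep(0.05)


if __name__ == "__main__":
    latencies = measure_latencies(search, users=100)
    print("p95 = %.3fs, passes: %s" % (percentile(latencies, 95), meets_requirement(latencies)))
```

Run as part of the build, a failing `meets_requirement` turns the requirement from a sentence in a story into a red build.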

One reason these problems are not found earlier is that testability is another requirement that is often missing when new features or changes are implemented. Changes to the software often break test scripts, and maintaining them is too hard for the testing teams. That’s why testing either happens too late or is done manually because it can’t be automated. Asking developers to build applications that are easy to test increases testability and allows testing early on, for example by making sure that the user interface uses field IDs that don’t change even when the layout changes, or by not changing URL patterns or REST interfaces from build to build.
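
To illustrate why stable IDs matter, the sketch below uses Python's standard `html.parser` module to find a form field by its `id`. The two HTML snippets and the `search-query` ID are made-up examples: the layout changes between builds, but a test that looks the field up by ID keeps working, whereas a test that relied on position (for example "the first input inside the second div") would break.

```python
from html.parser import HTMLParser
from typing import Dict, Optional


class FieldById(HTMLParser):
    """Collects the tag and attributes of the first element whose id matches."""

    def __init__(self, wanted_id: str) -> None:
        super().__init__()
        self.wanted_id = wanted_id
        self.found: Optional[Dict[str, Optional[str]]] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if self.found is None and attributes.get("id") == self.wanted_id:
            self.found = {"tag": tag, **attributes}


def find_field(html: str, field_id: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the matching element's tag and attributes, or None if absent."""
    parser = FieldById(field_id)
    parser.feed(html)
    parser.close()
    return parser.found


# Hypothetical build N: search box in the header.
BUILD_N = '<header><input id="search-query" name="q"></header>'
# Hypothetical build N+1: layout reworked, but the field keeps its stable id.
BUILD_N1 = '<main><div class="bar"><span>Find:</span><input id="search-query" name="q"></div></main>'

if __name__ == "__main__":
    for build in (BUILD_N, BUILD_N1):
        print(find_field(build, "search-query"))
```

Browser-automation tools offer the same idea through ID-based locators; the point is the contract between developers and testers, not the parser.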

Being able to deploy an application or a new feature easily is also key to automating performance and scalability testing and to speeding up deployments. Deployability is therefore another requirement that needs to be taken into account. Developers need to help Testers and Ops build automated ways to deploy software components, which reduces test environment setup time and improves both the speed and the quality of production rollouts.
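
A scripted deployment can also encode the order in which components must come up. The sketch below shows one possible approach, not the behavior of any particular deployment tool: it uses `graphlib.TopologicalSorter` (Python 3.9+) to derive a deployment order from declared dependencies. The component names and `deploy_component` are hypothetical placeholders for whatever actually installs each piece in your environment.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical components mapped to the components they depend on.
COMPONENTS: Dict[str, Set[str]] = {
    "database": set(),
    "cache": set(),
    "search-service": {"database", "cache"},
    "web-frontend": {"search-service"},
}


def deployment_order(components: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every component comes after its dependencies.

    Raises graphlib.CycleError if the declared dependencies contain a cycle.
    """
    return list(TopologicalSorter(components).static_order())


def deploy_component(name: str) -> None:
    # Placeholder: call the real install/configure step for this component here.
    print(f"deploying {name}")


def deploy_all(components: Dict[str, Set[str]]) -> List[str]:
    """Deploy every component in dependency order and return that order."""
    order = deployment_order(components)
    for name in order:
        deploy_component(name)
    return order
```

Calling `deploy_all(COMPONENTS)` deploys `database` and `cache` before `search-service`, and `web-frontend` last. Because the same script can set up a test environment and a production rollout, Dev, Test, and Ops all exercise one deployment path.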

Tighten Collaboration

When we talk about “The Wall” between the teams (Dev, Test, Ops, Business), most industry professionals we have talked with understand and recognize the concept. So these walls exist, and we have to tear them down. The best way to start is by inviting Testing and Operations to your daily development stand-ups. Let them listen in on what you are currently working on. Invite them to your sprint reviews and planning meetings so that they see what is coming down the pipeline. Listen to their feedback and include them in tasks such as “Building an automated deployment option for feature XYZ” or “Making a new REST Interface TESTABLE”. Also listen to their feedback on architectural decisions, e.g., which database to use or what information to log and how.

Developers can learn from Testers and Ops because they have know-how on performance, scalability, and deployment scenarios. On the other side, Testers and Ops can learn from developers how to build automated deployment tools for their test and production environments, lowering the amount of work they have to do for each new test or production deployment.

Working closely together allows the teams to agree on the tools used to measure the performance and scalability of new features, to collect diagnostics information, and to capture the information the business requests to measure success. Sharing these tools across all teams increases acceptance of the measured values and improves collaboration when the results are shared.

Measure KPIs for all Teams

We can only measure progress, success, and failure if we have facts. In our world, that means measurements that tell us about the quality of work and the impact it has on other teams. Code coverage, for example, is a metric that interests developers and testers because it tells them how good their test coverage is. It is also a good indicator for Ops: they can be more confident about a deployment if code coverage is at least as high as it was for the most recent successful deployment. Measuring and communicating these metrics across all teams is important because it helps each team understand the impact of its work on other teams’ objectives, and it gives them confidence that the work done by other teams allows them to achieve their own goals.
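
The coverage comparison described above can be automated as a simple gate. This minimal sketch assumes coverage is already available as a percentage for both the current build and the last successful deployment (how you obtain those numbers depends on your coverage tool); `allowed_drop` is a hypothetical knob for tolerating small fluctuations.

```python
from dataclasses import dataclass


@dataclass
class CoverageGateResult:
    current: float
    baseline: float
    passed: bool

    def message(self) -> str:
        """Human-readable verdict that can be shared with all teams."""
        verdict = "OK" if self.passed else "BLOCK"
        return (f"{verdict}: coverage {self.current:.1f}% vs. "
                f"{self.baseline:.1f}% at last successful deployment")


def coverage_gate(current_pct: float, baseline_pct: float,
                  allowed_drop: float = 0.0) -> CoverageGateResult:
    """Pass if coverage is at least the baseline minus a tolerance in percentage points."""
    passed = current_pct >= baseline_pct - allowed_drop
    return CoverageGateResult(current_pct, baseline_pct, passed)
```

For example, `coverage_gate(78.0, 80.0).message()` reports a `BLOCK`, which Ops can read the same way the developers do.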

From a development perspective, we want to avoid the top problem patterns we talked about. If we know that executing too many database queries per request is a problem, we should simply measure the number of database executions for all unit and integration tests. When the SQL count increases after a recent code change, that is an early warning signal for engineering to look into what caused the unintended increase. Providing these metrics to Ops, and telling them that even though you added new features you did not increase traffic on the database, gives them more confidence that they don’t need to scale up the database servers.
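
The sketch below shows one way to count statements in a test, using the standard library's `sqlite3` module and its `Connection.set_trace_callback` hook, which is invoked for each SQL statement SQLite executes. The schema and both loader functions are invented for illustration: the naive loader issues one query per customer (the classic N+1 pattern), while the batched loader fetches the same data with a single JOIN. With other databases or ORMs you would hook into their own statement-logging facility instead.

```python
import sqlite3
from typing import Dict, List


class SqlCounter:
    """Counts every SQL statement executed on a connection after creation."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.count = 0
        conn.set_trace_callback(self._on_statement)

    def _on_statement(self, _sql: str) -> None:
        self.count += 1


def make_db(customers: int) -> sqlite3.Connection:
    """In-memory database with one order per customer (illustrative schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    for i in range(customers):
        conn.execute("INSERT INTO customer (id, name) VALUES (?, ?)", (i, f"c{i}"))
        conn.execute("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (i, 10.0 * i))
    conn.commit()
    return conn


def load_orders_naive(conn: sqlite3.Connection) -> Dict[int, List[float]]:
    # 1 query for the customers + 1 query per customer (N+1 pattern).
    result: Dict[int, List[float]] = {}
    for (cid,) in conn.execute("SELECT id FROM customer").fetchall():
        rows = conn.execute("SELECT total FROM orders WHERE customer_id = ?", (cid,)).fetchall()
        result[cid] = [total for (total,) in rows]
    return result


def load_orders_batched(conn: sqlite3.Connection) -> Dict[int, List[float]]:
    # A single JOIN fetches the same data in one statement.
    result: Dict[int, List[float]] = {}
    rows = conn.execute(
        "SELECT c.id, o.total FROM customer c LEFT JOIN orders o ON o.customer_id = c.id"
    ).fetchall()
    for cid, total in rows:
        result.setdefault(cid, [])
        if total is not None:
            result[cid].append(total)
    return result
```

With five customers the naive loader executes six statements and the batched loader one. A CI job could record `counter.count` per test and flag any test whose count grows compared to the previous build.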

For Ops, successful and fast deployments are important. The time a new deployment takes is therefore a very relevant metric, and it is heavily affected by new features and by the architectural choices engineering makes. If deployment time increases, Ops needs to tell engineering that this is not acceptable because it causes too much downtime.
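
Deployment time is easy to capture once the deployment is scripted. The minimal sketch below assumes a deployment made of named steps (the step names are hypothetical) and uses a context manager built on `time.perf_counter` to record how long each step takes, so a regression can be traced back to the step that caused it. The 20% tolerance in `slower_steps` is an arbitrary example value.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List


class DeploymentTimer:
    """Records the wall-clock duration of each named deployment step."""

    def __init__(self) -> None:
        self.durations: Dict[str, float] = {}

    @contextmanager
    def step(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the step raises, so failed steps are timed too.
            self.durations[name] = time.perf_counter() - start

    def total(self) -> float:
        return sum(self.durations.values())


def slower_steps(current: Dict[str, float], baseline: Dict[str, float],
                 tolerance: float = 0.2) -> List[str]:
    """Steps whose duration grew by more than `tolerance` (0.2 = 20%) over the baseline."""
    return [
        name
        for name, seconds in current.items()
        if name in baseline and seconds > baseline[name] * (1 + tolerance)
    ]


if __name__ == "__main__":
    timer = DeploymentTimer()
    with timer.step("stop-old-version"):
        time.sleep(0.01)  # placeholder for the real step
    with timer.step("migrate-database"):
        time.sleep(0.02)  # placeholder for the real step
    print(f"total {timer.total():.2f}s", timer.durations)
```

Comparing per-step durations rather than only the total gives engineering a concrete starting point when Ops reports that deployments got slower.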

Measuring these values across all teams and communicating them is key to avoiding problems early on.

Automate, Automate, Automate

DevOps promises more releases in a shorter timeframe in order to adapt to problems or changes in the market faster. Becoming faster requires a high level of automation; every manual task takes time away from this goal. It is therefore important to automate all the areas mentioned in this article, whether it is performance and scalability tests, deployments, or the ability to scale up and down. It also means that the metrics defined by each team are measured automatically and automatically communicated and shared with everybody who relies on them to make decisions.
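
Automatic scaling usually boils down to a control rule. As one well-known example (not necessarily what your platform uses), the Kubernetes Horizontal Pod Autoscaler computes the desired replica count as ceil(current replicas × current metric / target metric) and skips scaling when the ratio is within a tolerance band around 1. The sketch below implements that rule; the min/max bounds and the 10% tolerance are example values, and the target is assumed to be greater than zero.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """HPA-style rule: scale proportionally to metric/target, clamped to bounds."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, four servers at 90% CPU with a 60% target scale to six, and the same four at 30% scale down to two.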

It is therefore time to invest in testing tools that can be integrated into the continuous integration process and automatically provide results on performance (e.g., response time) and architectural metrics (e.g., number of SQL statements). It also requires tools for automatic bundling and deployment, as well as automatic rollbacks in case things go wrong. All tools that are used or purpose-built need to report their measures of success or failure to a central, easily accessible location, whether that is a shared wiki or a ticketing system.
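
Put together, a pipeline stage might compare a build's performance and architectural metrics against agreed limits, roll back automatically if any limit is violated, and publish the result where every team can see it. In the sketch below the metric names, the limits, the `rollback` callback, and the JSON-file publisher are all hypothetical stand-ins; in practice `publish` would post to your wiki or ticketing system.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical limits agreed between Dev, Test and Ops.
LIMITS: Dict[str, float] = {
    "response_time_ms": 1000.0,
    "sql_statements_per_request": 20.0,
}


def violations(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Names of metrics above their limit; a missing metric counts as a violation."""
    return [name for name, limit in limits.items() if metrics.get(name, float("inf")) > limit]


def gate_deployment(
    build_id: str,
    metrics: Dict[str, float],
    rollback: Callable[[], None],
    publish: Callable[[Dict[str, Any]], None],
    limits: Dict[str, float] = LIMITS,
) -> bool:
    """Roll back on any violation and always publish a report; True if the build passed."""
    failed = violations(metrics, limits)
    if failed:
        rollback()
    publish({"build": build_id, "metrics": metrics,
             "violations": failed, "rolled_back": bool(failed)})
    return not failed


def publish_to_file(report: Dict[str, Any], path: str = "deployment-report.json") -> None:
    """Example publisher that writes the report as JSON to a shared location."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(report, fh, indent=2)
```

Passing `rollback` and `publish` in as callbacks keeps the gate independent of any particular deployment or reporting tool.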

Next Steps

How do you get started? The best way is to identify the root causes of your current war room scenarios. Then figure out how you can detect these problems already in development. Which measures do you need to look at to identify these performance or architectural issues? Which tests do you need to catch these mistakes, and how can you monitor your code to capture these problems and report them automatically?

In the next couple of blog posts we will look at specific problem patterns: how they impact end users if they end up in production code, how they can be identified early in development and testing, and how to quickly identify and fix them if they still make it into a deployment.
